https://www.softwaretestinghelp.com/what-is-software-testing-life-cycle-stlc/

#4. Design Phase:

This phase defines “HOW” to test. This phase involves the following tasks:

– Detail the test condition. Break down the test conditions into multiple sub-conditions to increase coverage.

– Identify and get the test data.

– Identify and set up the test environment.

– Create the requirement traceability matrix.

– Create test coverage metrics.

#5. Implementation Phase:

The major task in this STLC phase is the creation of detailed test cases. Prioritize the test cases and identify which of them will become part of the regression suite. Before finalizing the test cases, review them to ensure they are correct. Also, don’t forget to get sign-off on the test cases before actual execution starts.

If your project involves automation, identify the candidate test cases for automation and proceed with scripting them. Don’t forget to review the scripts.

https://www.guru99.com/software-testing-life-cycle.html

Requirement Analysis

During this phase, the test team studies the requirements from a testing point of view to identify the testable requirements.

The QA team may interact with various stakeholders (Client, Business Analyst, Technical Leads, System Architects, etc.) to understand the requirements in detail.

Requirements can be either Functional (defining what the software must do) or Non-Functional (defining system performance, security, and availability).

Automation feasibility analysis for the given testing project is also carried out in this stage.

Activities

- Identify types of tests to be performed.

- Gather details about testing priorities and focus.

- Prepare Requirement Traceability Matrix (RTM).

- Identify test environment details where testing is supposed to be carried out.

- Automation feasibility analysis (if required).

Deliverables

- RTM

- Automation feasibility report (if applicable)
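An RTM can be pictured as a mapping from each requirement to the test cases that cover it. The following is a minimal sketch of that idea, not a prescribed format: the requirement IDs, test case IDs, and the `build_rtm` helper are all hypothetical and only illustrate how gaps in traceability become visible.

```python
# Hypothetical links from test cases to the requirements they exercise.
test_to_requirements = {
    "TC-01": ["REQ-1"],
    "TC-02": ["REQ-1", "REQ-2"],
    "TC-03": ["REQ-3"],
}
# Hypothetical list of all testable requirements identified in this phase.
all_requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]


def build_rtm(test_map, requirements):
    """Invert test-to-requirement links into a requirement-to-tests matrix."""
    rtm = {req: [] for req in requirements}
    for test_id, reqs in sorted(test_map.items()):
        for req in reqs:
            rtm.setdefault(req, []).append(test_id)
    return rtm


rtm = build_rtm(test_to_requirements, all_requirements)
# Requirements with no linked test case are traceability gaps.
untested = [req for req, tests in rtm.items() if not tests]

for req, tests in rtm.items():
    print(req, "->", ", ".join(tests) or "(no test cases)")
print("Requirements without tests:", untested)
```

In this sketch `REQ-4` shows up as a requirement with no test case, which is the kind of gap an RTM is meant to expose.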

https://testfort.com/blog/7-stages-of-testing-life-cycle

Like any other complex process, software testing consists of different stages, each represented by a specific range of activities. Unlike testing methods, the stages remain much the same and include 7 different activities.

What are the stages in testing life cycle?

Testing Stage 1 – Test Plan

Software testing should always begin with establishing a well thought-out test plan to ensure efficient execution of the entire testing process. An efficient test plan must include clauses concerning the amount of work to be done, deadlines and milestones to be met, methods of testing, and other formalities such as contingencies and risks.

Testing Stage 2 – Analysis

At this stage, a functional validation matrix is made. The in-house or offshore testing team analyzes the requirements and the test cases to determine which are to be automated and which are to be tested manually.

Testing Stage 3 – Design

If the testing team has reached this stage, it means that there is no confusion or misunderstanding concerning the test plan, validation matrix, or test cases. At the Design stage, the testing team writes suitable scripts for automated test cases and generates test data for both automated and manual test cases.

While reviewing test cases, testers should consider suitability for automation. The team needs to automate as many tests as possible from previous and current iterations. This allows automated regression tests to reduce regression risk with less effort than manual regression testing would require. This reduced regression test effort frees the testers to more thoroughly test new features and functions in the current iteration.

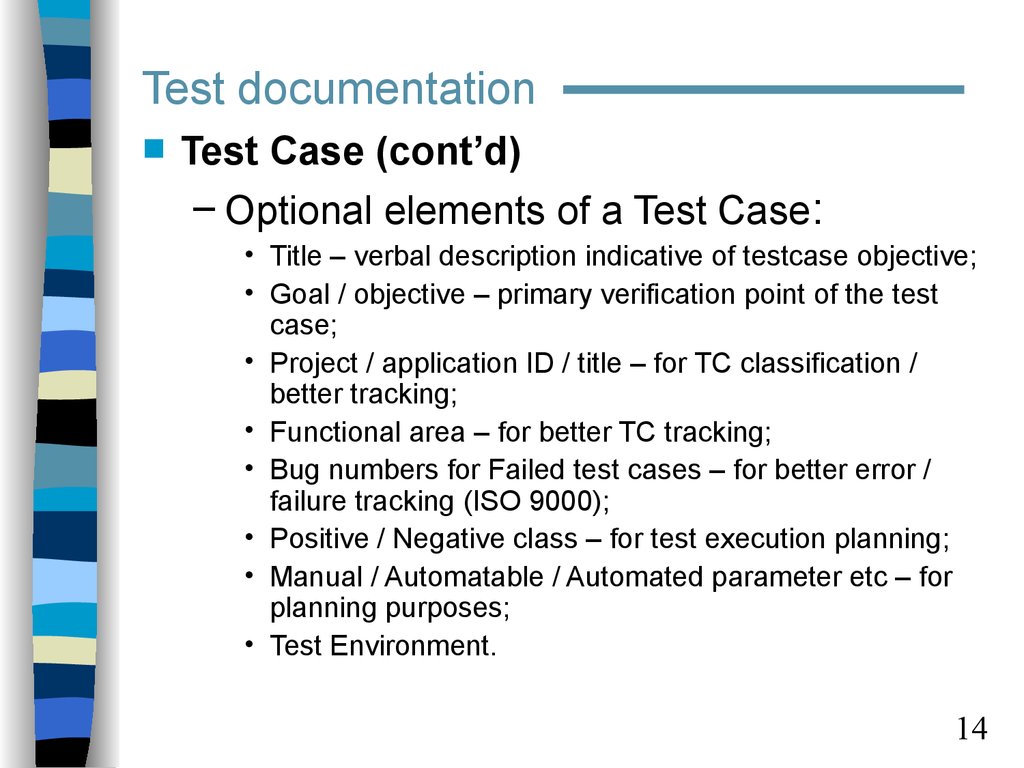

As the testing is conducted, test case status information should be recorded. This is usually done via a test management tool but can be done by manual means if needed. Test case information can include:

- Test case creation status (e.g., designed, reviewed)

- Test case execution status (e.g., passed, failed, blocked, skipped)

- Test case execution information (e.g., date and time, tester name, data used)

- Test case execution artifacts (e.g., screenshots, accompanying logs)

As with the identified risk items, the test cases should be mapped to the requirements items they test. It is important for the Test Analyst to remember that if test case A is mapped to requirement A, and it is the only test case mapped to that requirement, then when test case A is executed and passes, requirement A will be considered to be fulfilled. This may or may not be correct. In many cases, more test cases are needed to thoroughly test a requirement, but because of limited time, only a subset of those tests is actually created. For example, if 20 test cases were needed to thoroughly test the implementation of a requirement, but only 10 were created and run, then the requirements coverage information will indicate 100% coverage when in fact only 50% coverage was achieved. Accurate tracking of the coverage as well as tracking the reviewed status of the requirements themselves can be used as a confidence measure.

The amount (and level of detail) of information to be recorded depends on several factors, including the software development lifecycle model. For example, in Agile projects typically less status information will be recorded due to the close interaction of the team and more face-to-face communication.
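The gap between reported and achieved coverage in the 20-versus-10 example can be made concrete with a small calculation. This is an illustrative sketch only: the function names and the `REQ-A` identifier are hypothetical, and "reported coverage" is assumed here to mean the share of requirements with at least one passing test case.

```python
# Figures from the example: 20 test cases needed, 10 created, run, and passed.
needed = {"REQ-A": 20}   # test cases needed per requirement
passed = {"REQ-A": 10}   # test cases actually run and passed


def reported_coverage(needed, passed):
    """Percent of requirements with at least one passing test case."""
    covered = sum(1 for req in needed if passed.get(req, 0) > 0)
    return 100.0 * covered / len(needed)


def actual_coverage(needed, passed):
    """Percent of the needed test cases that were actually run and passed."""
    total_needed = sum(needed.values())
    total_passed = sum(min(passed.get(req, 0), n) for req, n in needed.items())
    return 100.0 * total_passed / total_needed


print("Reported requirements coverage:", reported_coverage(needed, passed))
print("Actual coverage:", actual_coverage(needed, passed))
```

The first figure is 100% and the second 50%, which is why tracking how many tests a requirement really needs matters as much as tracking which requirements have a passing test.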

During test implementation, Test Analysts should finalize and confirm the order in which manual and automated tests are to be run, carefully checking for constraints that might require tests to be run in a particular order. Dependencies must be documented and checked. As specified above, test data is needed for testing, and in some cases these sets of data can be quite large. During implementation, Test Analysts create input and environment data to load into databases and other such repositories. Test Analysts also create data to be used with data-driven automation tests as well as for manual testing.
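One way to check documented dependencies and derive a valid run order is a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the test names and the `execution_order` helper are hypothetical and stand in for whatever the team has documented.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical test IDs; each maps to the set of tests that must run before it.
dependencies = {
    "create_account": set(),
    "login": {"create_account"},
    "place_order": {"login"},
    "cancel_order": {"place_order"},
}


def execution_order(deps):
    """Return one valid run order, or raise ValueError on circular dependencies."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        # exc.args[1] holds the list of nodes that form the cycle.
        raise ValueError(f"circular test dependency: {exc.args[1]}") from exc


order = execution_order(dependencies)
print(order)
```

A circular dependency (test A needs B, B needs A) raises an error instead of silently producing an order, which is the "checked" part of documenting dependencies.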

During test execution, test results must be logged appropriately. Tests which were run but for which results were not logged may have to be repeated to identify the correct result, leading to inefficiency and delays. (Note that adequate logging can address the coverage and repeatability concerns associated with test techniques such as exploratory testing.) Since the test object, testware, and test environments may all be evolving, logging should identify the specific versions tested as well as specific environment configurations. Test logging provides a chronological record of relevant details about the execution of tests.

Results logging applies both to individual tests and to activities and events. Each test should be uniquely identified and its status logged as test execution proceeds. Any events that affect the test execution should be logged. Sufficient information should be logged to measure test coverage and document reasons for delays and interruptions in testing. In addition, information must be logged to support test control, test progress reporting, measurement of exit criteria, and test process improvement.

Logging varies depending on the level of testing and the strategy. For example, if automated component testing is occurring, the automated tests should produce most of the logging information. If manual testing is occurring, the Test Analyst will log the information regarding the test execution, often into a test management tool that will track the test execution information. In some cases, as with test implementation, the amount of test execution information that is logged is influenced by regulatory or audit requirements.
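As a rough illustration of what such a log record might hold, the sketch below captures a unique test ID, status, the version tested, the environment, and a timestamp. The field names, the `ExecutionLogEntry` class, and the sample values are assumptions made for this example, not a format defined by the syllabus or by any test management tool.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

ALLOWED_STATUSES = {"passed", "failed", "blocked", "skipped"}


@dataclass
class ExecutionLogEntry:
    test_id: str               # unique identifier of the test
    status: str                # one of ALLOWED_STATUSES
    test_object_version: str   # specific version that was tested
    environment: str           # environment configuration used
    tester: str
    notes: str = ""            # events affecting execution, reasons for delays
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        if self.status not in ALLOWED_STATUSES:
            raise ValueError(f"unknown status: {self.status!r}")


execution_log = []  # chronological record of test execution


def log_result(**details):
    """Create a log entry and append it to the chronological log."""
    entry = ExecutionLogEntry(**details)
    execution_log.append(entry)
    return entry


log_result(
    test_id="TC-017",
    status="failed",
    test_object_version="2.4.1",
    environment="staging, Firefox on Linux",
    tester="analyst-1",
    notes="Timeout while saving the order",
)
print(json.dumps([asdict(e) for e in execution_log], indent=2))
```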

7.2.3.4 Improving the Success of the Automation Effort

When determining which tests to automate, each candidate test case or candidate test suite must be assessed to see if it merits automation. Many unsuccessful automation projects are based on automating the readily available manual test cases without checking the actual benefit from the automation. It may be optimal for a given set of test cases (a suite) to contain manual, semi-automated and fully automated tests.

During deployment of a test execution automation tool, it is not always wise to automate manual test cases as is, but to redefine the test cases for better automation use. This includes formatting the test cases, considering re-use patterns, expanding input by using variables instead of using hard-coded values and utilizing the full benefits of the test tool. Test execution tools usually have the ability to traverse multiple tests, group tests, repeat tests and change order of execution, all while providing analysis and reporting facilities.
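The point about using variables instead of hard-coded values can be illustrated with a data-driven test, where one test procedure is run against a table of inputs and expected results. This is a minimal sketch: `apply_discount` is a hypothetical function under test, and the data rows are invented for the example.

```python
def apply_discount(price, percent):
    """Hypothetical function under test."""
    return round(price * (1 - percent / 100), 2)


# Test data kept separate from the test logic: (price, percent, expected).
CASES = [
    (100.0, 0, 100.0),
    (100.0, 25, 75.0),
    (80.0, 50, 40.0),
]


def run_cases(cases):
    """Run the same test procedure once per data row and collect the outcomes."""
    results = []
    for price, percent, expected in cases:
        actual = apply_discount(price, percent)
        status = "passed" if actual == expected else "failed"
        results.append((price, percent, status))
    return results


results = run_cases(CASES)
for price, percent, status in results:
    print(f"price={price} percent={percent}: {status}")
```

Adding a new scenario then means adding a data row rather than copying and editing a whole test script.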

7.2.3.5 Keyword-Driven Automation

Keywords (sometimes referred to as action words) are mostly, but not exclusively, used to represent high-level business interactions with a system (e.g., “cancel order”). Each keyword is typically used to represent a number of detailed interactions between an actor and the system under test. Sequences of keywords (including relevant test data) are used to specify test cases. [Buwalda01]

In test automation a keyword is implemented as one or more executable test scripts. Tools read test cases written as a sequence of keywords that call the appropriate test scripts which implement the keyword functionality. The scripts are implemented in a highly modular manner to enable easy mapping to specific keywords. Programming skills are needed to implement these modular scripts. The primary advantages of keyword-driven test automation ar
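The keyword mechanism described in this excerpt can be sketched in a few lines: a table maps each keyword to a script, and a test case is a sequence of keywords with their data. Everything here is hypothetical and simplified for illustration, including the in-memory `OrderSystem` standing in for the system under test and the keyword names other than “cancel order”.

```python
class OrderSystem:
    """Hypothetical system under test: an in-memory order store."""

    def __init__(self):
        self.orders = {}

    def create_order(self, order_id, item):
        self.orders[order_id] = {"item": item, "status": "open"}

    def cancel_order(self, order_id):
        self.orders[order_id]["status"] = "cancelled"

    def status_of(self, order_id):
        return self.orders[order_id]["status"]


def check_status(system, order_id, expected):
    """Script behind the 'check status' keyword: fail if the status differs."""
    actual = system.status_of(order_id)
    assert actual == expected, f"{order_id}: expected {expected}, got {actual}"


# Each keyword maps to the modular script that implements it.
KEYWORDS = {
    "create order": lambda system, order_id, item: system.create_order(order_id, item),
    "cancel order": lambda system, order_id: system.cancel_order(order_id),
    "check status": check_status,
}


def run_test_case(steps):
    """Read a test case written as keywords plus data and call the matching scripts."""
    system = OrderSystem()
    for keyword, *args in steps:
        KEYWORDS[keyword](system, *args)
    return system


# A test case specified as a sequence of keywords with test data.
test_case = [
    ("create order", "A-1", "book"),
    ("check status", "A-1", "open"),
    ("cancel order", "A-1"),
    ("check status", "A-1", "cancelled"),
]

final_state = run_test_case(test_case)
print("test case passed")
```

The test case itself contains no programming logic, so in this arrangement it could be written by someone who knows the business process while the scripts behind each keyword are maintained separately.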

https://www.istqb.org/downloads/send/37-foundation-level-archive/208-ctfl-2018-syllabus.html

Test design

During test design, the test conditions are elaborated into high-level test cases, sets of high-level test cases, and other testware. So, test analysis answers the question “what to test?” while test design answers the question “how to test?” Test design includes the following major activities:

- Designing and prioritizing test cases and sets of test cases

- Identifying necessary test data to support test conditions and test cases

- Designing the test environment and identifying any required infrastructure and tools

- Capturing bi-directional traceability between the test basis, test conditions, test cases, and test procedures (see section 1.4.4)

Test implementation

During test implementation, the testware necessary for test execution is created and/or completed, including sequencing the test cases into test procedures. So, test design answers the question “how to test?” while test implementation answers the question “do we now have everything in place to run the tests?” Test implementation includes the following major activities:

- Developing and prioritizing test procedures, and, potentially, creating automated test scripts

- Creating test suites from the test procedures and (if any) automated test scripts

- Arranging the test suites within a test execution schedule in a way that results in efficient test execution (see section 5.2.4)

- Building the test environment (including, potentially, test harnesses, service virtualization, simulators, and other infrastructure items) and verifying that everything needed has been set up correctly

- Preparing test data and ensuring it is properly loaded in the test environment

- Verifying and updating bi-directional traceability between the test basis, test conditions, test cases, test procedures, and test suites (see section 1.4.4)

Test design and test implementation tasks are often combined. In exploratory testing and other types of experience-based testing, test design and implementation may occur, and may be documented, as part of test execution. Exploratory testing may be based on test charters (produced as part of test analysis), and exploratory tests are executed immediately as they are designed and implemented (see section 4.4.2).

Test execution

During test execution, test suites are run in accordance with the test execution schedule. Test execution includes the following major activities:

- Recording the IDs and versions of the test item(s) or test object, test tool(s), and testware

- Executing tests either manually or by using test execution tools

- Comparing actual results with expected results

- Analyzing anomalies to establish their likely causes (e.g., failures may occur due to defects in the code, but false positives also may occur [see section 1.2.3])

- Reporting defects based on the failures observed (see section 5.6)

- Logging the outcome of test execution (e.g., pass, fail, blocked)

- Repeating test activities either as a result of action taken for an anomaly, or as part of the planned testing (e.g., execution of a corrected test, confirmation testing, and/or regression testing)

- Verifying and updating bi-directional traceability between the test basis, test conditions, test cases, test procedures, and test results

Test completion

Test completion activities collect data from completed test activities to consolidate experience, testware, and any other relevant information. Test completion activities occur at project milestones such as when a software system is released, a test project is completed (or cancelled), an Agile project iteration is finished (e.g., as part of a retrospective meeting), a test level is completed, or a maintenance release has been completed. Test completion includes the following major activities:

- Checking whether all defect reports are closed, entering change requests or product backlog items for any defects that remain unresolved at the end of test execution

- Creating a test summary report to be communicated to stakeholders

- Finalizing and archiving the test environment, the test data, the test infrastructure, and other testware for later reuse

- Handing over the testware to the maintenance teams, other project teams, and/or other stakeholders who could benefit from its use

- Analyzing lessons learned from the completed test activities to determine changes needed for future iterations, releases, and projects

- Using the information gathered to improve test process maturity

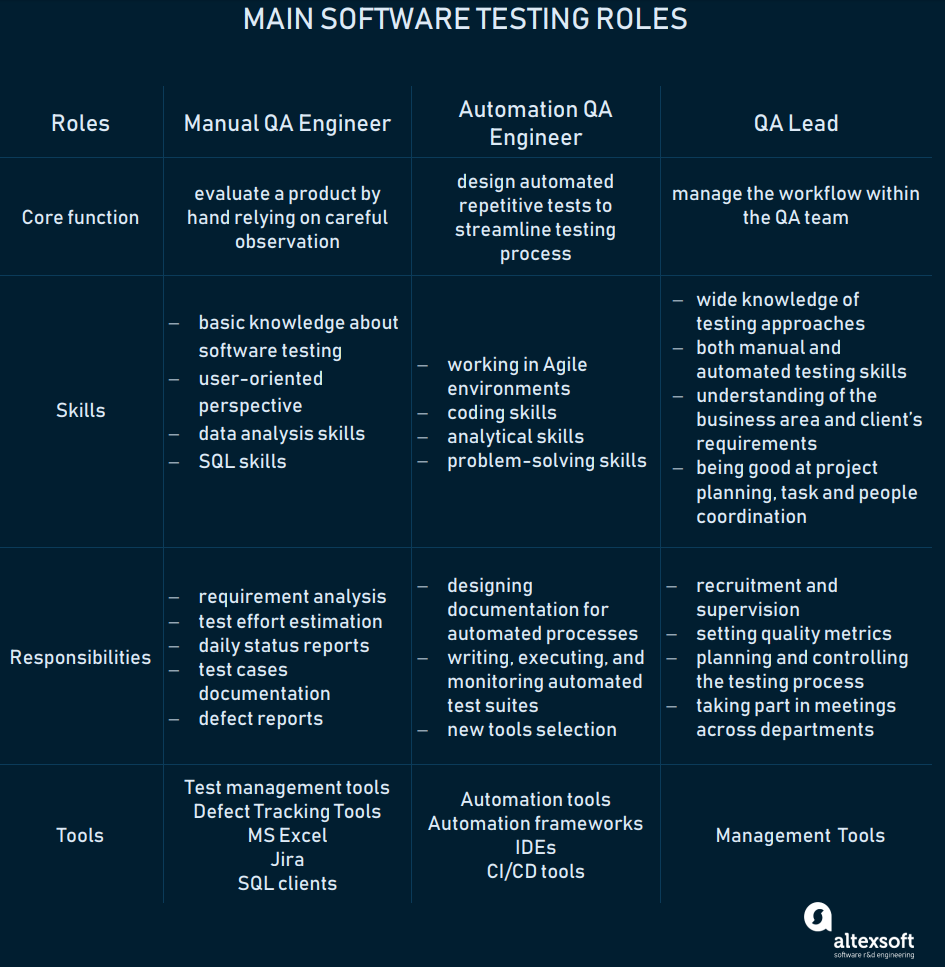

5.1.2 Tasks of a Test Manager and Tester (p65)

In this syllabus, two test roles are covered, test managers and testers. The activities and tasks performed by these two roles depend on the project and product context, the skills of the people in the roles, and the organization. The test manager is tasked with overall responsibility for the test process and successful leadership of the test activities. The test management role might be performed by a professional test manager, or by a project manager, a development manager, or a quality assurance manager. In larger projects or organizations, several test teams may report to a test manager, test coach, or test coordinator, each team being headed by a test leader or lead tester. Typical test manager tasks may include:

- Develop or review a test policy and test strategy for the organization

- Plan the test activities by considering the context, and understanding the test objectives and risks. This may include selecting test approaches, estimating test time, effort and cost, acquiring resources, defining test levels and test cycles, and planning defect management

- Write and update the test plan(s)

- Coordinate the test plan(s) with project managers, product owners, and others

- Share testing perspectives with other project activities, such as integration planning

- Initiate the analysis, design, implementation, and execution of tests, monitor test progress and results, and check the status of exit criteria (or definition of done)

- Prepare and deliver test progress reports and test summary reports based on the information gathered

- Adapt planning based on test results and progress (sometimes documented in test progress reports, and/or in test summary reports for other testing already completed on the project) and take any actions necessary for test control

- Support setting up the defect management system and adequate configuration management of testware

- Introduce suitable metrics for measuring test progress and evaluating the quality of the testing and the product

- Support the selection and implementation of tools to support the test process, including recommending the budget for tool selection (and possibly purchase and/or support), allocating time and effort for pilot projects, and providing continuing support in the use of the tool(s)

- Decide what should be automated, to what degree, and how

https://www.istqb.org/downloads/send/37-foundation-level-archive/3-foundation-level-syllabus-2011.html, pg48

- Decide about the implementation of test environment(s)

- Promote and advocate the testers, the test team, and the test profession within the organization

- Develop the skills and careers of testers (e.g., through training plans, performance evaluations, coaching, etc.)

The way in which the test manager role is carried out varies depending on the software development lifecycle. For example, in Agile development, some of the tasks mentioned above are handled by the Agile team, especially those tasks concerned with the day-to-day testing done within the team, often by a tester working within the team. Some of the tasks that span multiple teams or the entire organization, or that have to do with personnel management, may be done by test managers outside of the development team, who are sometimes called test coaches. See Black 2009 for more on managing the test process.

Typical tester tasks may include:

- Review and contribute to test plans

- Analyze, review, and assess requirements, user stories and acceptance criteria, specifications, and models for testability (i.e., the test basis)

- Identify and document test conditions, and capture traceability between test cases, test conditions, and the test basis

- Design, set up, and verify test environment(s), often coordinating with system administration and network management

- Design and implement test cases and test procedures

- Prepare and acquire test data

- Create the detailed test execution schedule

- Execute tests, evaluate the results, and document deviations from expected results

- Use appropriate tools to facilitate the test process

- Automate tests as needed (may be supported by a developer or a test automation expert)

- Evaluate non-functional characteristics such as performance efficiency, reliability, usability, security, compatibility, and portability

- Review tests developed by others

People who work on test analysis, test design, specific test types, or test automation may be specialists in these roles. Depending on the risks related to the product and the project, and the software development lifecycle model selected, different people may take over the role of tester at different test levels. For example, at the component testing level and the component integration testing level, the role of a tester is often done by developers. At the acceptance test level, the role of a tester is often done by business analysts, subject matter experts, and users. At the system test level and the system integration test level, the role of a tester is often done by an independent test team. At the operational acceptance test level, the role of a tester is often done by operations and/or systems administration staff.

https://www.mindfulqa.com/qa-best-practices/

https://moduscreate.com/blog/using-jira-sub-tasks-for-qa-workflows/
